A lot of people have asked about the computers I used to train TorToiSe. I’ve been meaning to snap some pictures, but it’s never “convenient” to turn these servers off so I keep procrastinating.

We had some severe thunderstorms today here in the front range which forced me to shut down my servers. I took the opportunity to take some photos.

A little history to start

Building out my servers has been a long, multi-year effort. I started the process right around the time that NVIDIA launched their Ampere GPU lineup. I was fortunate to be able to grab 6 RTX 3090s right around launch time, and slowly built up my inventory one GPU at a time over the course of 2021. I’m sure no one will believe me, but I did manage to purchase every one of the 16 GPUs I own at MSRP.

I maintain two servers. The reason is that I run this whole operation as a business: one of my servers is rented out on vast.ai. This server pays the bills: electricity, component replacement, upgrades, etc. The other server is solely used for my deep learning projects. Both servers are identical except for storage and GPU count. The rental server only has 7 GPUs while my ML server has 8. My ML server also has a massive bank of SSDs to store my datasets.

It probably goes without saying, but I built and maintain these rigs myself. They were pieced together at the component level. I have always enjoyed building computers and I can honestly say that I like maintaining these rigs almost as much as I like using them to build models.

A goal from the get-go was maximum GPU density per-server. This is because without dropping serious $$$ on mellanox high-speed NICs and switches, inter-server communication bandwidth quickly becomes the bottleneck when training large models. I can’t afford fancy enterprise grade hardware, so I get around it by keeping my compute all on the same machine. This goal drives many of the choices I made in building out my servers, as you will see.

Pics

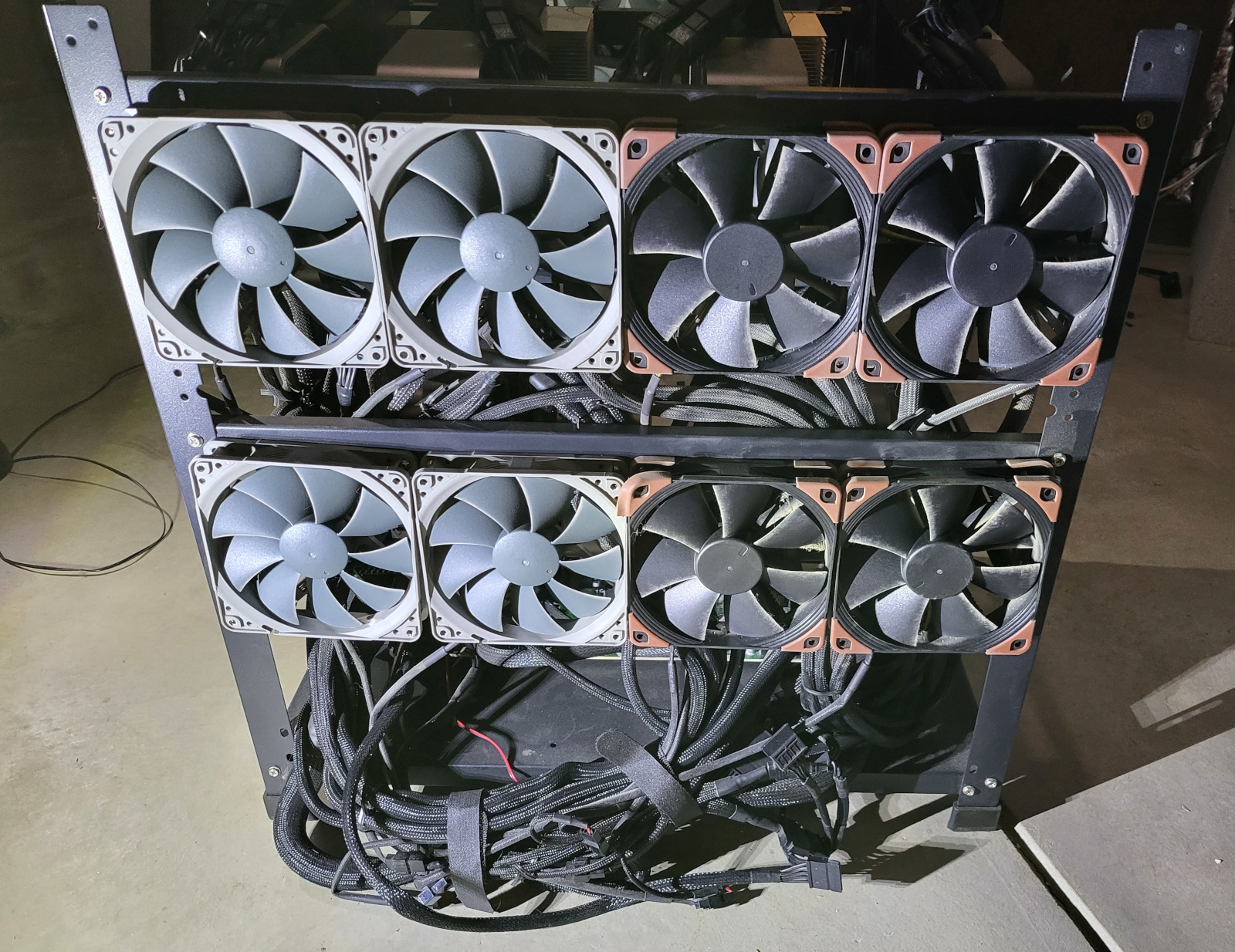

Here are some pictures of my 7 GPU rig. As stated above, the 8 GPU server is nearly identical except for a large SSD array hidden away inside.

The pictures were taken with a bright LED shining on the computer, and look far more dusty than they actually are. Don’t judge me. 🙂

Components

Lets talk specs. My deep learning server consists of:

(Note: Amazon and eBay links below are affiliate links, because either I’m a shill, or it doesn’t matter – take your pick.)

- CPU: AMD EPYC 7502

- GPUs: 8x RTX 3090

- Motherboard: Asrock ROMED8-2T

- Memory: 256GB Cisco PC4-2400T DDR4 RAM

- Storage: Samsung 970 EVO 512GB, ADATA SX8200PNP 2TB, 8x Samsung 860 QVO 8TB

- Case: AAAwave 12 GPU

- PCI-E Risers: Maxcloudon Bifurcated Risers

- PSUs: 3x EVGA 1600W G+

I’ll talk over these choices:

CPU – This was an easy choice. With the goal being GPU density, PCIe lanes were of paramount importance. EPYC has no competition in this regard. In terms of choosing core-counts: I deemed 64 cores to be excessive for my needs, and it is fairly hard to come across 24-core EPYCs. I purchased both of my 7502 CPUs used for under $1000 on eBay. I think this was a steal. The other good option is the Threadripper pro, for which the 32 core model retails at $2800. One of my EPYC’s is a retail model, the other is a QS model. I haven’t noticed any difference between the two. If you buy one of these like I did, be very careful not to buy one removed from an American server manufacturer, they are often node-locked.

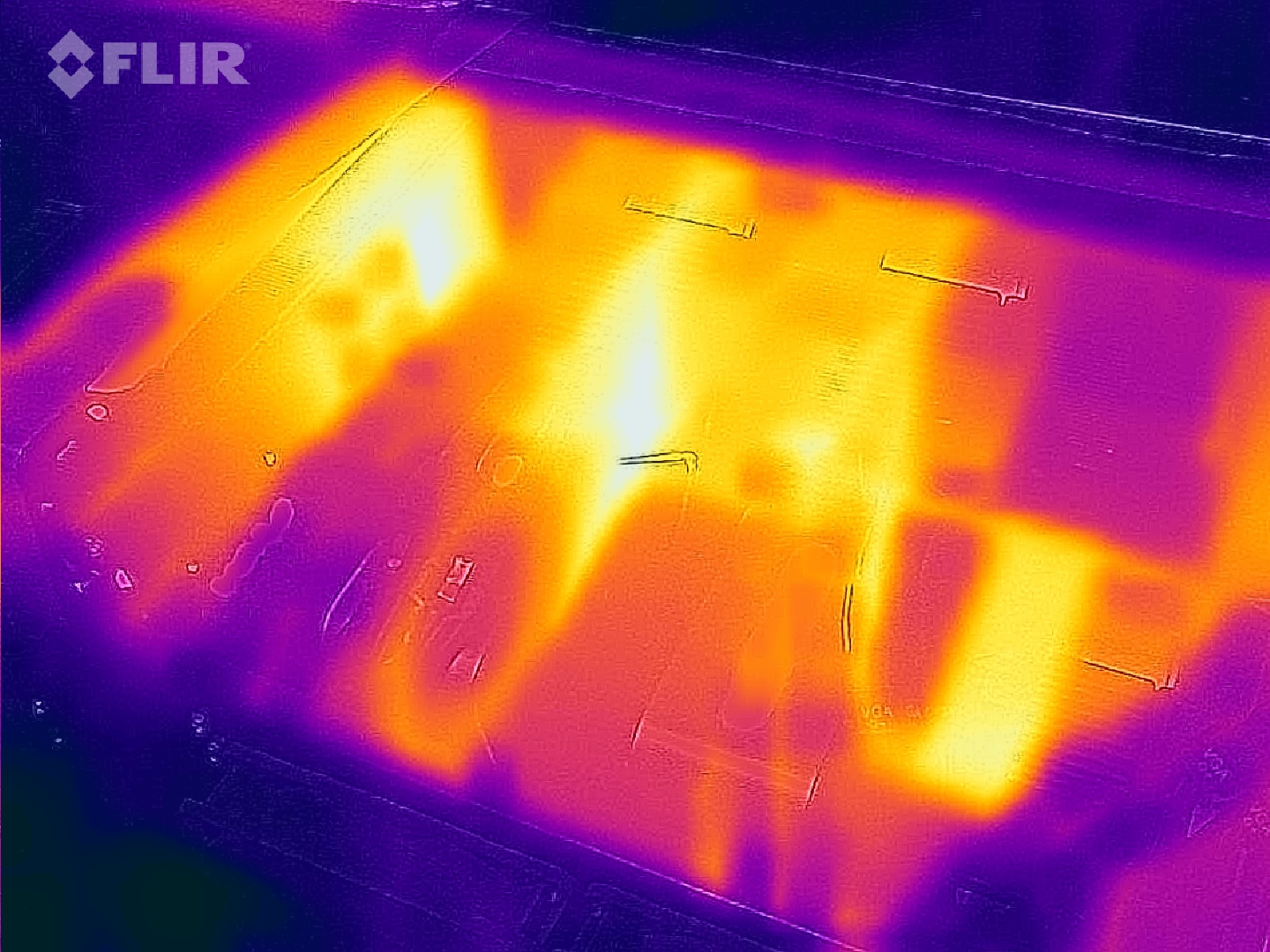

GPUs – I use RTX 3090s exclusively. I am not married to any particular version of the 3090, and my servers are a hodge-podge of the OEM NVIDIA 3090 variant and the EVGA FTW variant. The reason I only have these two is because all other manufacturers are scalping us (though I expect that to change soon..) I also like the rear-exhaust design of the OEM model, which lends itself well to multi-GPU configurations. I re-pasted and replaced the thermal pads on all of my GPUs. Both EVGA and NVIDIA did a horrible job with memory thermals and they should frankly be embarrassed with themselves. Selling $2000 GPUs that immediately go to 100% fan power and derate themselves when the memory modules hit 110C is utterly unacceptable. This is not just because I am misusing these GPUs: I have a single-GPU workstation that exhibits the same problems while gaming. Shame. I also added custom heatsinks to the backplates of the OEM 3090s where the memory modules reside. These reduce the memory temps by an additional 10C.

Motherboard – My primary goal when selecting a motherboard was to find a single-CPU board with the maximum number of possible full-width PCI-E x16 slots. Since I was bound to the EPYC CPU, this basically constrained me to the ROMED8-2T. On paper, this board has fantastic specs and was exactly what I was looking for. In practice, it has a fair number of infuriating bugs that make me wish some other players were in the market. For example, I cannot update the firmware on the board (it always errors out no matter which method I use), the IPMI is garbage, and you cannot use all of the SATA ports without doing a weird configuration hack in the BIOS which has no documentation whatsoever.

RAM – Server memory is damned expensive! The only nice thing I can say about my memory is that it was cheap. My RAM is slow and the sticks are slowly dying one at a time. This will be the next upgrade when I can scrape together the sheckels..

Storage – I have a 1TB NVME for the OS drive and a 2TB NVME to store code and model checkpoints. The latter is certainly overkill as everything vital is permanently in RAM anyways. I also have an array of 8x 8TB Samsung QVOs in RAID 5 with 1 redundant drive to store my datasets. Random access is extremely important for machine learning so HDDs were not an option. These are quite pricey these days but I picked them up for under $500/pc on a lucky black friday sale. Kinda wish I had bought some more at this point.

Case – I originally constructed my two servers in a rackmounted chassis built for mining. I had a lot of trouble with airflow and maintenance was a PITA. At some point I gave up and went for an open-air design, which is what I have stuck with. The AAAwave case I ended up with is basic and fairly cheap, and it gets the job done. I could probably fit 9 3090s total in this case, but I’d probably want to get more clever about airflow if I did so.

PCI-e Risers – This is another component that is surprisingly tricky to figure out. Because most of the GPUs use a different power supply from the motherboard, you cannot use “normal” PCI-e risers like you would find at your local computer parts store. You need to use risers with an electrically isolated PCI-e slot. I also needed risers with a fairly long length, at least 2 feet. There were two options I found for this: the first are the Comino risers. LTT featured these awhile back and they are some slick kit. Unfortunately, they have some kind of issue with 3090 cards (or I am using them wrong). I had three of them burn out at the PCI-e connector before I gave up on them permanently. Fortunately, none of them damaged any GPUs. Lately, I have been using these bifurcated risers from maxcloudon. They allow me to split my GPU slots (meaning I can support up to 14 GPUs on a single server!) and have been dead reliable for almost 6 months now. The biggest downside is that all of these risers only work with PCIE gen 3. If anyone has any tips for power-isolated gen 4 compatible risers with bifurcation support, PLEASE contact me. You will have a friend for life.

PSUs – Naive me thought that I would be able to power 4x “350W” GPUs with a single 1600W PSU. Nope. Doesn’t work, not even on 240V. A lesser-known fact about 3090s is they actually pull burst currents of up to 600W. This means that a 1600W PSU will power-on and even run 4x 3090s, but it will randomly trip the overcurrent protection when all 3090s simultaneously draw their peak power, which happens every couple of hours. This was a fun one to figure out. I now run 3 1600W PSUs, each one powering 3 GPUs (or 2 GPUs+everything else). All PSUs run on 240V circuits I had installed specifically for these servers. I have several 2400W server PSUs that I intend to move to someday, but they are too loud to be used while these servers live in my home.

Cooling & Power

When I moved past 6 GPUs, power and thermals started to become a concern for me. I housed these servers in the utility room of my basement, so ventilation was already pretty good and the heat didn’t affect my family too much, but I was worried about component life as the room was regularly sitting at 100F. I had also exhausted what two 120V outlets were able to safely give.

To solve the electricity problem, I had an electrician come in and install two 240V circuits with L6-20P sockets. You can purchase cords that are compatible with most large power supplies for these sockets on eBay. Most high-end power supplies are already dual-voltage compatible so nothing needed to be done there. Some power supplies come with switches on the back to swap between 120V and 240V so RTFM.

Heat was a bit more challenging. My utility room has two ventilation shafts built in which were passive. I opted to install two centrifugal air pumps, one hooked to each ventilation shaft to move air out of the room. I built an enclosure around the servers using cinderblocks and sheet metal. I made the enclosure air-tight and the centrifugal pumps pull air directly from inside the enclosure, establishing negative pressure behind the computers. This was highly effective at dealing with the heat problem, and the room is only a few degrees above the rest of the house now.

I want to provide an operational note that is related to thermals an power: I set the power limit on all of my GPUs to 285W, ~80% of the rated power of a 3090. From testing, this reduces performance by ~6% but greatly improves thermals.

The GPUs do run hot, however. Viewed from the front of the server, the rightmost GPUs run the hottest in the high 70s C, as reported by nvidia-smi. The leftmost operate in the 60s. I justify this by remembering that my A5000 workstation GPU runs at 85C at all times, despite having excellent airflow.

A practical guide to using multiple PSUs

Figuring out how to power these servers with multiple PSUs was by far the most nerve-wracking part of the build. If you attempt to do some research about going this route, be prepared to read commentary from hundreds of self-professed electrical engineers telling you “do not, under any circumstances do this!“

Being the headstrong and reckless guy that I am, I did it anyways. My line of reasoning was that if I isolated the PSUs into separate “circuits” that only got joined at the ground and data lines, I probably wouldn’t have to worry too much about them fighting with each other and causing failures. I built out these “circuits” using the maxcloudon PCIe powered risers, which allow me to fully power on 3x GPU “subsystems” with each 1600W power supply. All “circuits” are tied at the ground.

One extremely strange issue I ran into at one point was that one of my servers became what I can only describe as “electrically tainted”. If this computer was plugged into a network switch, the switch stopped routing traffic and the entire network behind that switch went dead. The computer was still operating fine with an attached keyboard and monitor. After a lot of experimentation, I isolated the problem to a single power supply “circuit” on the computer. If this circuit (and the attached GPUs) was disabled, the problem disappeared. The only unique thing about this circuit is that it was powered by a different PSU, a corsair AX1600i while the rest of the circuits used EVGA Golds. On a whim, I swapped in a spare 1600 gold and the problem disappeared. Since then, when combining PSUs, I have only used PSUs from the of the same make and model.

Past the above issue, I have not had any other problems in 2 years of near-continuous operation for both servers. Does this mean you should do it? Probably not. I’m not an EE and this is some expensive equipment. You make your own risk calls.

Why am I not using <x> GPU?

Because, frankly, the 3090 is a very nice sweet spot for someone like me looking to balance cost and performance. A little known, but fun fact is that it actually has better FP32 performance than even the A100. FP16 performance is dogshit due to software locks NVIDIA put in place. There is little reason to use FP16 except for the small amount of VRAM savings. That’s fine, it means I don’t have to worry about numeric stability. 🙂

I have considered building out a A6000 server. 48GB of VRAM would be quite nice, and fully unlocking FP16 would be great as well. I also prefer the cooling solution on the A6000 (though knowing about the shit NVIDIA pulled with the 3090, I’m sure the thermals are atrocious). It’s honestly a cost problem at this point. An 8x A6000 server will cost me somewhere in the realm of $40k, maybe a little less if NVIDIA gave me some research / SMB discounts. That’s more than both of the servers I currently own cost combined. Just for the GPUs. It’ll be cool if I could scrape that kind of dough together at some point, but for now I’m stuck to consumer GPUs.

Wrapping up..

I hate that high performance computing has become synonymous with the hyperscalers and that most people don’t even consider building out their own hardware anymore. Building computers is super cool and more people should do it. It may even become relatively cheap to do so as miners start liquidating their assets after ETH 2.0 goes live. I hope this helps out others who are interested in building deep learning rigs.

One other thing: I hesitated somewhat posting this out of concerns of becoming a target for robbery. To any would be thieves out there: my computers will soon be relocated to a new industrial space so that I can continue building out my operation. Please stay away from my home.