In machine learning research, there is often a stated desire to build “end-to-end” training pipelines, where all of the models cohesively learn from a single training objective. In the past, it has been demonstrated that such models perform better than ones assembled from multiple components, each trained with its own loss.

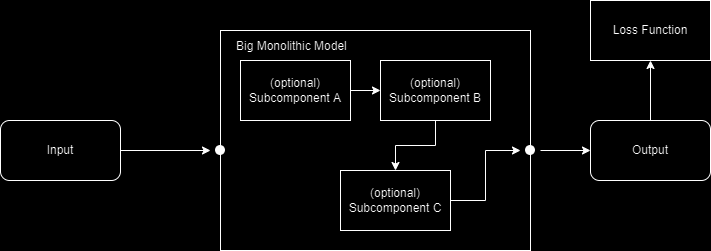

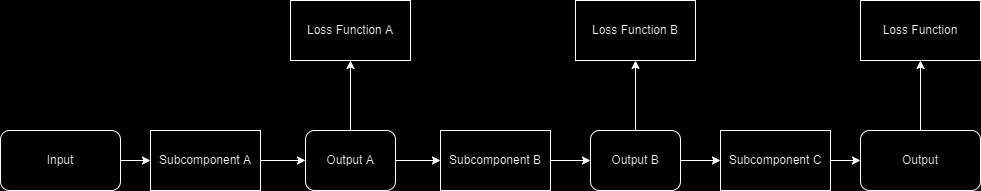

The reasoning behind this notion is sound: every time you break a model up into different parts, you necessarily introduce a new lossy medium through which those parts must communicate.

The prevailing theory is that these losses build up and produce an altogether inferior model at the end of the pipeline.

I am not going to try to convince you that models trained separately from different losses (which I’ll call “composite models” in this post) are better than those trained end-to-end. Instead, I want to make the case for when they should be used.

A few analogies

A similar debate to this occurs across many engineering fields:

In software, we debate the virtues of “monolithic architectures” versus “microservices”. Monoliths are giant programs where all of the code runs inside a single process. They are efficient and easy to reason about, but are an utter PITA to maintain and reuse. Microservice architectures are the opposite. They incur communication costs across processes (or servers!), and it is often hard to understand how they work at a deep technical level because the logic is so spread out. On the other hand, microservices are far easier to maintain, since teams can be broken up into small groups that each own a service. Likewise, services can often be re-used or upgraded independently of each other.

An analog also exists in mechanical engineering, where the debate is about how many parts should be used to build an end product. It is well known that you can reduce weight and make stronger structures by getting rid of fasteners and sub-assemblies. For example, instead of building a rocket fuel tank from two shells that are then bolted together and sealed, it is much more efficient to form the entire tank as a single piece. Doing so, however, comes at a cost:

- The manufacturing equipment required to build large components is expensive.

- One-piece components are often non-repairable and must be replaced wholesale.

- Upgrades similarly mean scrapping the entire component.

- One-piece components are often application-specific and cannot be re-used.

Do you see the parallels?

I don’t really consider myself “all-in” on either side of the “microservices” versus “monolith” debate. I’ve seen really bad designs on both sides. I think the best approach is some middle ground, and the best engineers are the ones who can strike a good balance between the two.

Bringing this back to ML

As ML becomes more of an engineering discipline, I think it is healthy to start paying attention to this debate when architecting our models.

I want to talk quickly about Tortoise, which is a composite model built from four components: the autoregressive (AR) model, the diffusion decoder, CLVP and the vocoder.
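A minimal sketch of how data might flow through a four-stage composite model like this one. Every interface, name and toy stand-in below (`CompositeTTS`, the 8192-entry token vocabulary, `HOP`, `FRAMES_PER_TOKEN`) is a hypothetical illustration, not Tortoise’s actual code or API. In a real system each stage would be a separately trained network; the only thing the stages share is the format of the data they pass along.

```python
import random
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical data formats passed between stages.
Tokens = List[int]
Mel = List[List[float]]  # [frames][mel_bins]
Wave = List[float]


@dataclass
class CompositeTTS:
    """Hypothetical composite pipeline: each field is an independently trained stage."""
    ar_sample: Callable[[str, int], List[Tokens]]  # text -> N candidate token sequences
    rerank_score: Callable[[str, Tokens], float]   # (text, tokens) -> match score
    decode_to_mel: Callable[[Tokens], Mel]         # tokens -> mel spectrogram
    vocode: Callable[[Mel], Wave]                  # mel spectrogram -> waveform

    def __call__(self, text: str, num_candidates: int = 4) -> Wave:
        candidates = self.ar_sample(text, num_candidates)
        # Keep only the candidate the reranker scores highest.
        best = max(candidates, key=lambda toks: self.rerank_score(text, toks))
        return self.vocode(self.decode_to_mel(best))


# Toy stand-ins so the sketch runs; none of these are real models.
HOP = 256              # assumed waveform samples per mel frame
FRAMES_PER_TOKEN = 4   # assumed mel frames produced per token
N_MELS = 80            # assumed number of mel bins


def toy_ar_sample(text: str, n: int) -> List[Tokens]:
    rng = random.Random(len(text))  # seeded so the sketch is deterministic
    return [[rng.randrange(8192) for _ in text] for _ in range(n)]


def toy_rerank(text: str, toks: Tokens) -> float:
    return -abs(sum(toks) / len(toks) - 4096)  # arbitrary toy score


def toy_decode(toks: Tokens) -> Mel:
    return [[t / 8192.0] * N_MELS for t in toks for _ in range(FRAMES_PER_TOKEN)]


def toy_vocode(mel: Mel) -> Wave:
    return [frame[0] for frame in mel for _ in range(HOP)]


tts = CompositeTTS(toy_ar_sample, toy_rerank, toy_decode, toy_vocode)
wave = tts("hello world")
print(len(wave))  # 11 tokens * 4 frames * 256 samples = 11264
```

The point of the structure is that any field can be replaced by something else with the same input and output types, without retraining the others.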

There probably is a world where Tortoise could be built as a monolith. Here’s what you would do: build a single AR model that predicts a probability distribution over SoundStream codes.* Your sequence lengths would be huge, so you’ll probably need a few TB of GPU memory and a few orders of magnitude more processing cores.** Oh, and you got rid of CLVP re-ranking, so you’ll probably want to increase the model dimension (d) to better fit your dataset (better increase the size of that too!). You’ll need to multiply your GPU memory and cores by that increase in d.

The problem with the above approach is obvious: it’s not computationally feasible with the technology we have available to us today. When building Tortoise, my primary consideration was “how do I take audio data sampled at 22kHz and apply large transformer models to it efficiently?”. The composite model architecture I ended up building was my direct solution to that question. The vocoder, diffusion model and CLVP are all ways to reduce the need for infeasible amounts of computation at the AR model level.
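To put rough numbers on the sequence-length problem, the sketch below counts how many tokens an AR transformer would see for 10 seconds of audio under three representations, plus the size of the resulting self-attention score matrix. The rates are assumptions chosen for illustration: 22,050 Hz raw audio, a SoundStream-style codec at an assumed 75 frames per second with 8 codebooks flattened into one sequence, and a hypothetical compressed representation at one token per 1,024 samples. None of them are measured from Tortoise.

```python
# Assumed representation rates; illustrative only, not taken from Tortoise.
SAMPLE_RATE = 22_050          # raw waveform samples per second
CODEC_FRAMES_PER_SEC = 75     # assumed SoundStream-style frame rate
CODEC_CODEBOOKS = 8           # assumed residual codebooks, flattened into one sequence
COMPRESSED_HOP = 1_024        # hypothetical: waveform samples covered by one token


def seq_lens(seconds: int) -> dict:
    """Sequence length an AR transformer would see for `seconds` of audio."""
    samples = seconds * SAMPLE_RATE
    return {
        "raw waveform": samples,
        "SoundStream codes": seconds * CODEC_FRAMES_PER_SEC * CODEC_CODEBOOKS,
        "compressed tokens": samples // COMPRESSED_HOP,
    }


for name, n in seq_lens(10).items():
    # Dense self-attention computes an n x n score matrix per head, per layer.
    print(f"{name}: n = {n:,}, attention scores = {n * n:,}")
```

Under these assumptions, 10 seconds of audio is 220,500 raw samples, 6,000 codec tokens or 215 compressed tokens, and the attention score matrix shrinks from about 4.9e10 entries to 3.6e7 to 46,225. Because attention cost grows with n², every factor of compression in the sequence pays off quadratically.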

A really neat result of this design decision is that you get a lot of fun, added benefits:

- Each of Tortoise’s modular components is useful on its own. The diffusion model can be used as a decoder for highly-compressed speech, for example.

- I can “upgrade” each component separately from the others (kind of). I did just this with CLVP2.

- Problems can often be isolated to the component level and fixed in place, which is more than you can say for many (most?) ML models.
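The “upgrade one component” idea can be illustrated with a toy sketch: as long as the replacement keeps the same interface, one reranker version can be swapped for another without touching the generator that produced the candidates. Everything here (the `Scorer` signature, both scoring rules, the candidate sequences) is hypothetical and only stands in for something like CLVP; it is not how CLVP2 was actually integrated into Tortoise.

```python
from typing import Callable, Dict, List

Tokens = List[int]
Scorer = Callable[[str, Tokens], float]  # hypothetical shared reranker interface


def pick_best(text: str, candidates: List[Tokens], scorer: Scorer) -> Tokens:
    """Select the candidate the reranker prefers; nothing upstream is retrained."""
    return max(candidates, key=lambda toks: scorer(text, toks))


def reranker_v1(text: str, toks: Tokens) -> float:
    # Toy score: prefer candidates whose length matches the text length.
    return -abs(len(toks) - len(text))


def reranker_v2(text: str, toks: Tokens) -> float:
    # Toy "upgraded" score: same length preference, minus one point per repeated token.
    return reranker_v1(text, toks) - (len(toks) - len(set(toks)))


RERANKERS: Dict[str, Scorer] = {"v1": reranker_v1, "v2": reranker_v2}

# Candidates from a frozen (hypothetical) generator.
candidates = [[1, 1, 1, 1, 1], [1, 2, 3, 4, 5, 6]]
for version, scorer in RERANKERS.items():
    print(version, pick_best("hello", candidates, scorer))
```

Swapping `"v1"` for `"v2"` changes which candidate is selected while the generator and its outputs stay exactly the same, which is also what makes a misbehaving stage easier to isolate.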

* Some of you are probably thinking “hey, you used SoundStream – that’s not e2e”. You’re right. The true e2e solution would be a diffusion model over waveform samples. My rough estimate is that would require more compute than exists on the planet right now. Good luck!

** Unless you use PerceiverAR, although doing so comes with its own set of compromises and is certainly less performant than a fully dense model.

Some good rules of thumb

While it’s clear that I think building composite models is at least worthy of consideration, there are definitely some dos and don’ts. Here are a few personal guidelines I’ve come up with:

- Do break up models to reduce spatial complexity. My opinion is that current ML models settle for way too much spatial complexity. A big image generator shouldn’t be throwing huge vectors at each pixel.

- On a related note, I think it is insane that we are throwing 12k-wide vectors at (roughly) individual words in today’s LLMs when we have also shown that 768-wide vectors can sufficiently represent an entire sentence.

- Do break up models to concentrate model parameters where they are needed. For example, the greatest variance within music happens at the lyrical level. If I wanted to build a music generator, I’d throw my biggest model at the task of generating lyrical structure and smaller models everywhere else.

- Do make the operating medium between two models as descriptive as possible. Discrete codes are the worst. Latent vectors are pretty good but tough to reason about. Compact, descriptive mediums like spectrograms are the gold standard.

- Don’t break up models by semantic classes. An example would be training separate image recognition models for cats and dogs. You don’t “think” in the same way that ML models do and you need to let them learn how to build their own semantic understandings.

- Don’t break up models without each having a distinctive purpose. An example would be building two models, each with 1B parameters, which operate sequentially. You might believe the result would be as good as a 2B parameter model. In most cases you’d be wrong. Most parameters in both models would more than likely learn the exact same things. Use a bigger model and model parallelism instead.
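To make the spatial-complexity points concrete, here is some back-of-the-envelope arithmetic on how many floats a single layer must hold when it places one vector at every position. All of the sizes are assumptions picked for illustration (a 256x256 image versus an 8x-downsampled 32x32 latent grid at d = 1024; a 20-token sentence at d = 12,288, which is GPT-3’s width, versus a single 768-wide sentence embedding). They do not describe any specific model.

```python
# Floats held per layer when every position carries a width-d vector.
# All sizes are illustrative assumptions, not measurements of any specific model.


def per_position_floats(positions: int, width: int) -> int:
    """Floats needed to hold one width-d vector at every position in a layer."""
    return positions * width


# Image generator: a vector at every pixel vs. at every cell of a downsampled latent grid.
pixel_space = per_position_floats(256 * 256, 1_024)   # assumed 256x256 image, d = 1024
latent_space = per_position_floats(32 * 32, 1_024)    # assumed 32x32 latent grid, d = 1024

# Language: a 12k-wide vector per token vs. one 768-wide vector per sentence.
token_space = per_position_floats(20, 12_288)         # assumed 20-token sentence, d = 12288
sentence_space = per_position_floats(1, 768)          # one 768-wide sentence embedding

print(pixel_space // latent_space)    # 64
print(token_space // sentence_space)  # 320
```

This only counts raw storage; it says nothing about whether the smaller representation discards information a downstream model needs, which is exactly what the “descriptive medium” guideline is about.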