SRFlow is a really neat adaptation of normalizing flows for the purpose of image super-resolution. It is particularly compelling because it potentially trains SR networks with only a single negative-log-likelihood loss.

Thanks to a reference implementation from the authors or the paper, I was able to bring a trainable SRFlow network into DLAS. I’ve had some fun playing around with the models I have trained with this architecture, but I’ve also had some problems that I want to document here.

First of all – the good

First of all – SRFlow does work. It produces images that are perceptually better than PSNR-trained models and don’t have artifacts like GAN-trained ones. For this reason, I think this is a very promising research direction, especially if we can figure out more effective image processing operations that have tractable determinants.

Before I dig into the “bad”, I want to provide a “pressure relief” for the opinions I express here. These are not simple networks to train or understand. It is very likely that I have done something wrong in my experiments. Everything I state and do is worth being double-checked (and a lot of it is trivial to do so for those who are actually interested).

The Bad

Model Size

SRFlow starts with a standard RRDB backbone, and tacks on a normalizing flow network. This comes at significant computational cost. RRDB is no lightweight already, and the normalizing flow net is much, much worse. These networks have a step time about 4x what I was seeing with ESRGAN networks. It is worth noting that reported GPU utilization while training SRFlow networks is far lower than I am used to, averaging about 50%. I believe this is due to inefficiencies in the model code (which I took from the author). I was tempted to make improvements here, but preferred to keep backwards compatibility so I could use the authors pretrained model.

Aside from training slowly, SRFlow has a far higher memory burden. On my RTX3090 with 24G of VRAM, I was running OOM when trying to perform inference on images about 1000x1000px in size (on the HQ end).

Artifacts

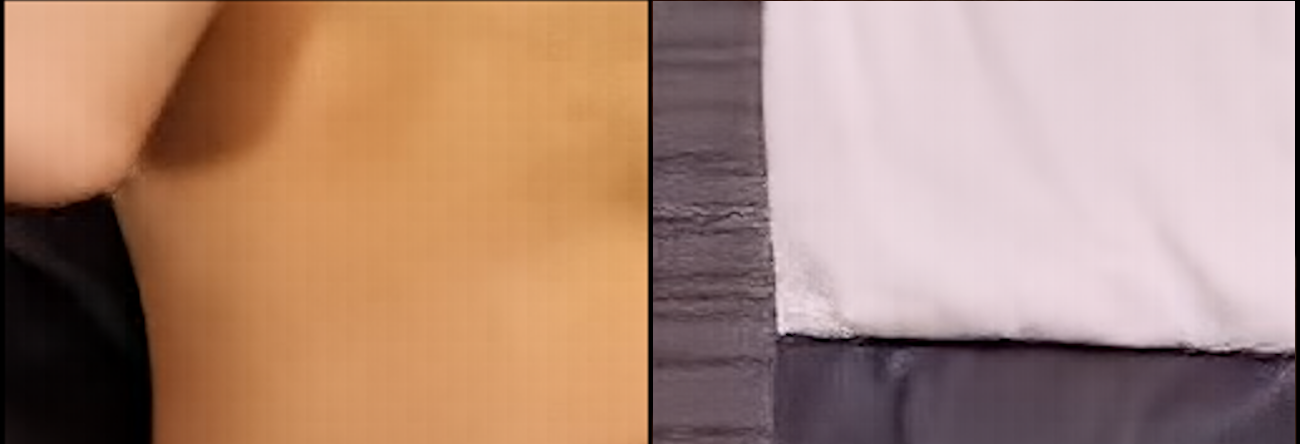

While SRFlow generally produces aesthetically pleasing results, every trained model I have used generates subtle blocky artifacts. These artifacts are most visible in uniform textures. Here is are two good examples of what I am talking about:

I have encountered these artifacts in other generative models I have trained in the past. They result from 1×1 convolutions which cannot properly integrate small differences in latent representation that neighboring pixels might contain. Unfortunately, SRFlow can only use 1×1 convolutions because these are the only type of convolution which are invertible.

Technically speaking, there is no reason why we could not eliminate these artifacts using additional “consistency” filters trained on the SRFlow output. I think it is worth knowing about them, though, since they point at a deeper problem with the architecture.

The SRFlow architecture currently has poor convergence

This one is a bit more complicated. You first need to understand the objective function of normalizing flows: to map a latent space (in this case, HQ images conditional on their LQ counterparts) to a distribution indistinguishable from gaussian noise.

To show why I think that SRFlow does a poor job at this, I will use the pretrained 8x face upsampler model provided by the authors. To demonstrate the problems with this model, I pulled a random face from the FFHQ dataset and downsampled it 8x:

Original:

LQ:

I then went to the Jupyter notebook found in author’s repo and did a few upsample tests with the CelebA_8x model. Here is the best result:

Note that it is missing a lot of high frequency details and has some of the blocky artifacts discussed earlier.

I then converted that same model into my repo, and ran a script I have been using to play with these models. One thing I can do with this script is generate the “mean” face for any LR input (simple really, you just feed a tensor full of zeros to the gaussian input). Here is the output from that:

So what you are seeing here is what the model thinks the “most likely” HQ image is for the given LQ input. For reference, here is the image difference between the original HQ and the mean:

Note that the mean is missing a lot of the high-frequency details. My original suspiscion for why this is happening is that the network is encoding these details into the Z vector that it is supposed to be converting to a gaussian distribution. To test this, I plotted the std(dim=1) and mean(dim=1) of the Z vectors at the end of the network (dim 1 is channel/filter dimension):

Mean:

Std:

In a well trained normalizing flow, these would be indistinguishable from noise. As you can see, they are not: the Z vector contains a ton of structural information about the underlying HQ image. This tells me that the network is unable to properly capture these high frequency details and map them to a believable function.

This is, in general, my experience with SRFlow. I presented one image above, but the same behavior is exhibited in pretty much all inputs I have tested with and extends to every other SRFlow network I have trained or work with. The best I can ever get out of the network is images with Z=0, which produces appealing, “smoothed” images that beat out PSNR losses, but it is misses all of the high-frequency details that a true SR algorithm should be creating. No amount of noise at the Z-input produces these details: the network simply does not learn how to convert these high frequency details into true gaussian noise.

It is worth noting that I brought this up with the authors. They gave this response to my comments, which provides some reasons why I may be seeing these issues. I can buy into these reasons, but they point to limitations with SRFlow that render it much less useful than other types of SR networks.

Conclusion

I think the idea behind SRFlow has some real merit. I hope that the authors or others continue this line of research and find architectures that do a better job converging. For the time being, however, I will continue working with GANs for super-resolution.